Some productivity metrics are evil

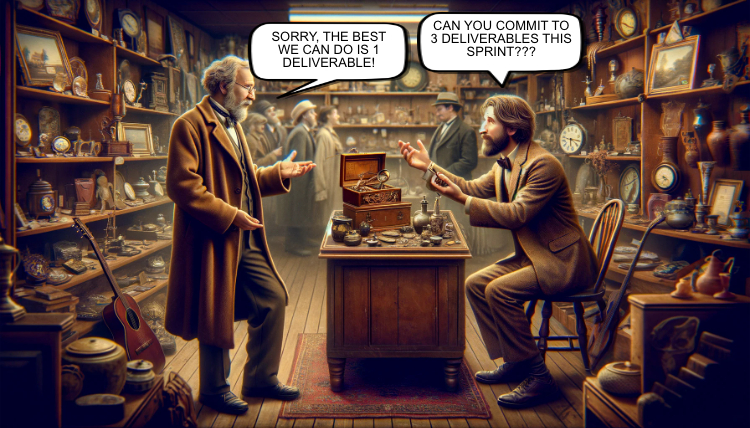

You have the company, the vision, the product, and the plan to improve it and make it more feature rich. You also have the stakeholders, who are anxious to see those new features delivered. If you’re responsible for that delivery, now you also have a problem!

You don’t want to overpromise, nor do you want to underpromise. One thing is sure: you must promise something; commitment is not optional.

This is where the problem starts. If you overpromise, you’ll probably miss every deadline, putting immense pressure on the people working on the deliverables. If you underpromise, you’re not realizing the full potential of your product teams, and you’re wasting resources.

So, you do the sensible thing. You tell the product teams to plan their sprint (or any other unit you use to measure an interval of work), committing only to the number of deliverables they are highly confident they can deliver. Teams will do their due diligence and ensure the number of deliverables is optimal, with maximum utilization of the team’s resources and a “quality first” mindset.

And they lived happily ever after—the end.

Well… not exactly. Unfortunately, the reality is a bit different. The story above is just one side of the coin. The other side is how you run the company and evaluate the performance of the teams and their members.

To assess performance, you need some metrics. And this is where it can get tricky. Metrics are problematic because if you’re not careful about how you set them, you can end up incentivizing unhealthy and unwanted behavior.

Let me use a simple example from our story at the beginning of this post to discuss “deliverables.”

Let’s say you have two teams, a blue and a yellow team. You decided to introduce a metric that shows whether each team can achieve a pre-agreed number of deliverables per sprint. As “deliverable” is a pretty ambiguous term, you agreed to talk to both teams before each sprint, discuss the complexity of the deliverables, and decide together how many they can commit to.

After ten sprints, you look at the metrics. The blue team committed to four deliverables each sprint and achieved their goal every time. The yellow team, on the other hand, committed to three deliverables each sprint but failed to meet their goal three times out of ten.

Your metrics tell you that the blue team should be rewarded, and the yellow team should have their performance evaluated. I’m oversimplifying this situation, and any sensible manager wouldn’t reward or reprimand the team based on one metric. Still, organizations often have many loosely defined metrics, which can all lead to questionable actions.
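To make the arithmetic concrete, here is a minimal sketch of how such a metric might be computed from the two teams’ sprint records. The names `SprintRecord` and `commitment_hit_rate` are hypothetical, and which of the yellow team’s sprints were missed is an assumption made purely for illustration; only the totals come from the example above.

```python
from dataclasses import dataclass


@dataclass
class SprintRecord:
    committed: int   # deliverables the team committed to for the sprint
    goal_met: bool   # whether the team delivered everything it committed to


def commitment_hit_rate(sprints):
    """Share of sprints in which the team met its own commitment."""
    return sum(s.goal_met for s in sprints) / len(sprints)


# Blue team: four deliverables per sprint, goal met in all ten sprints.
blue = [SprintRecord(committed=4, goal_met=True) for _ in range(10)]

# Yellow team: three deliverables per sprint, goal missed three times.
# Assumption: the misses are placed in the first three sprints.
yellow = [SprintRecord(committed=3, goal_met=i >= 3) for i in range(10)]

blue_rate = commitment_hit_rate(blue)
yellow_rate = commitment_hit_rate(yellow)
print(f"blue: {blue_rate:.0%}, yellow: {yellow_rate:.0%}")
```

Notice what the function never looks at: how large or risky each deliverable was, or how the commitment was negotiated in the first place. It only records whether a self-set target was hit.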

While it might be true that the blue team outperforms the yellow team, you can’t be sure. The metric used in the example leaves a lot of possibilities (and some questionable behavior) open. Here are some examples:

- The blue team is better at overselling the complexity of the deliverables, lowering the actual output they’ll commit to. The blue team might be better at sales but not necessarily at delivery.

- The blue team decides to overlook quality (testing, covering edge cases, cleaning technical debt, etc.), optimizing for shipping as many features as possible but endangering the product long-term.

- The blue team marks some product requirements as not feasible during the discovery/refinement sessions to avoid dealing with uncertain work, even though that work could yield high rewards for the company.

- The yellow team could’ve possibly delivered multiples of what the blue team achieved, always trying to push themselves more each sprint. They optimized for value and set ambitious goals to advance the product further.

My examples describe the blue team as toxic, and this might be extreme, but it illustrates the point pretty well. Anecdotally, having worked in several toxic environments in the past, I have witnessed this behavior first-hand.

Even though it’s rarely the intention of the person responsible, some metrics they introduce to their teams can be EVIL! This is how I define an evil metric.

An “EVIL METRIC” is a performance metric that, due to its design or the context in which it is applied, can lead to behaviors contrary to the best interests of the team, product, or company.

It promotes short-term gains at the expense of long-term success, quality, and team morale. It can lead to toxic behaviors such as gaming the system, overselling, underdelivering, neglecting quality, and avoiding challenging but potentially rewarding work.

How to avoid implementing evil metrics?

Here are some questions you can ask yourself when you’re thinking about the metrics you want to use to assess the performance of your team(s):

- What behaviors will this metric incentivize? Are there any negative behaviors it might inadvertently promote?

- Does this metric favor quantity over quality, or does it balance both?

- Is the metric understandable, and can team members see how their actions directly influence it?

- How easy is it to game or manipulate this metric? What safeguards are in place to prevent this?

- Does the metric fairly evaluate all team members, considering the diversity of roles and tasks?

- How will this metric be used in conjunction with rewards and recognition? Will it encourage teamwork and collaboration or foster unhealthy competition?

Final thoughts

Ensure the metrics you use promote fair play and are not prone to manipulation. Discuss the metrics with the team and gather feedback. You should never use metrics that are not transparent to those who will be evaluated based on them.

Reporting on progress and communicating with stakeholders don’t have to overlap with evaluating your team’s productivity. There is a balance, but it requires a lot of work to establish. All the good things do! Happy metric setting, and don’t be evil!